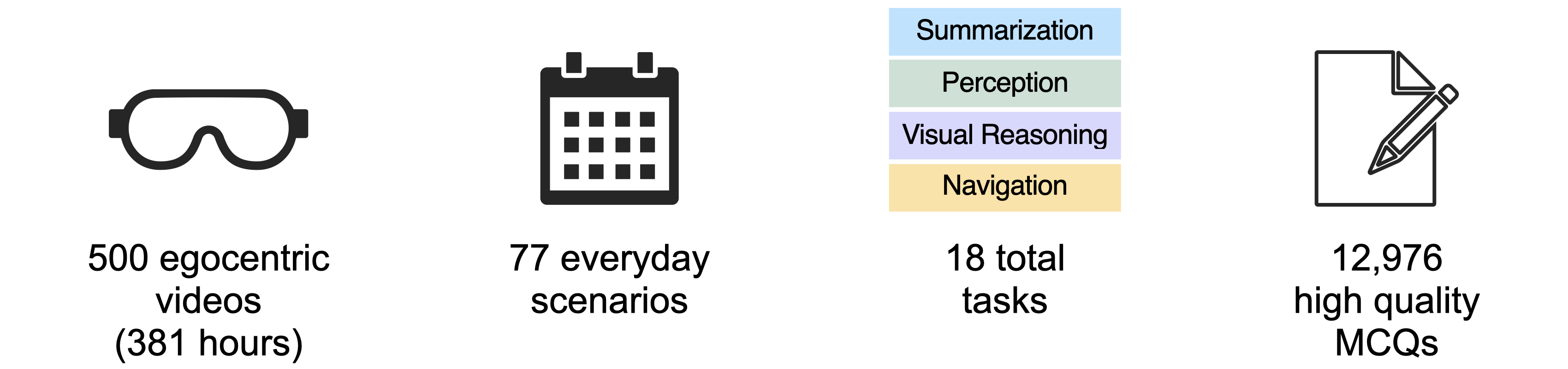

HourVideo at a Glance

We present HourVideo, a benchmark dataset for hour-long video-language understanding. The dataset introduces a novel task suite comprising summarization, perception (recall, tracking), visual reasoning (spatial, temporal, predictive, causal, counterfactual), and navigation (room-to-room, object retrieval) tasks. HourVideo includes 500 manually curated egocentric videos from the Ego4D dataset, with durations ranging from 20 to 120 minutes, and features 12,976 high-quality, five-way multiple-choice questions. Benchmarking results reveal that multimodal models, including GPT-4 and LLaVA-NeXT, achieve only marginal improvements over random chance (20% for five-way multiple choice). In stark contrast, human experts significantly outperform the state-of-the-art long-context multimodal model, Gemini Pro 1.5 (85.0% vs. 37.3%), highlighting a substantial gap in multimodal capabilities.
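Because every question is five-way multiple choice, accuracy is best read against the 20% chance baseline. The snippet below is a minimal sketch of that comparison, not the benchmark's official evaluation code: the function names and the toy answer lists are hypothetical, and it assumes each prediction and ground-truth answer is a single option label.

```python
def mcq_accuracy(predictions, answers):
    """Fraction of questions whose predicted option label matches the answer."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("need at least one question")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def chance_accuracy(num_options=5):
    """Expected accuracy of uniform random guessing over num_options choices."""
    return 1.0 / num_options


# Hypothetical toy data (not taken from HourVideo).
answers = ["A", "C", "E", "B", "D"]
predictions = ["A", "C", "B", "B", "A"]

acc = mcq_accuracy(predictions, answers)
baseline = chance_accuracy()
print(f"accuracy={acc:.1%}, chance={baseline:.1%}, margin={acc - baseline:+.1%}")
```

Read the same way, the reported 37.3% for Gemini Pro 1.5 sits 17.3 points above chance and 47.7 points below the 85.0% achieved by human experts.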

|

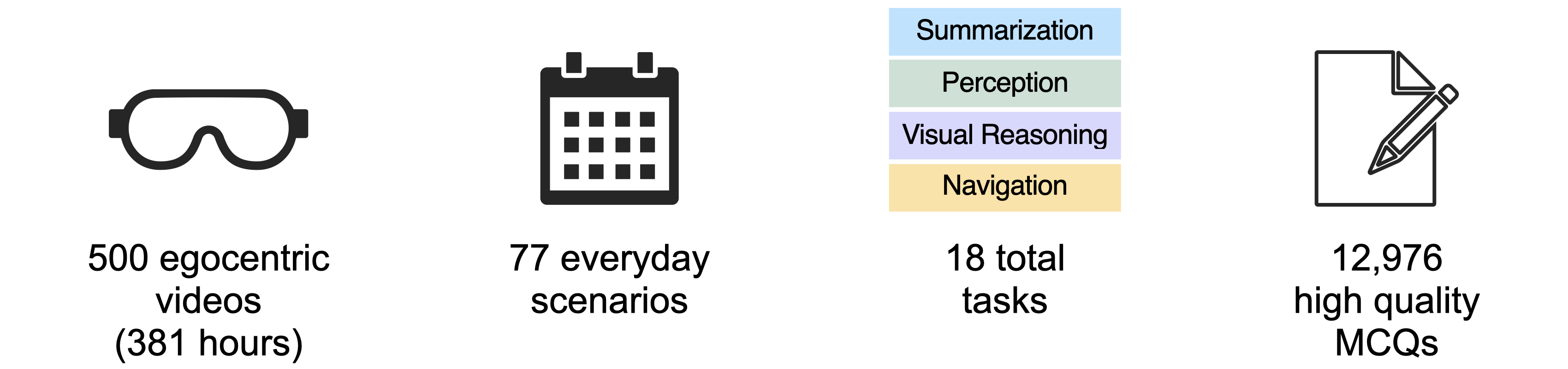

Summarization

|

|||

| Key Events/ Objects | Summarize the key interactions of the camera wearer in the [supermarket]. | ||

| Temporal Sequencing | Describe the sequence of activities performed by the camera wearer to [prepare the dessert]. | ||

| Compare/ Contrast | How did the camera wearer's activities in the [apartment] differ from those in the [restaurant]? | ||

|

Perception

| Sub-task | Example question |
|---|---|
| Information Retrieval | |
| • Factual Recall | What [dairy products] did the camera wearer [pick up] in the [supermarket]? |
| • Sequence Recall | What did the camera wearer do immediately after [weighing tomatoes] at the [supermarket]? |
| • Temporal Distance | How long after starting to [eat pizza] did the camera wearer [dispose of the pizza box]? |
| Tracking | List the unique [individuals] the camera wearer interacted with at the [drugstore]. |

Visual Reasoning

| Sub-task | Example question |
|---|---|
| Spatial | |
| • Relationship | Where was the [microwave] placed in relation to the [stove] in the [kitchen]? |
| • Proximity | Is the [microwave] closer to the [fridge] compared to the [sink]? |
| • Layout | Which is the correct [IMAGE] depicting the layout of the camera wearer's [apartment]? |
| Temporal | |
| • Duration | Which activity did the camera wearer spend more time on: [cooking] or [playing the piano]? |
| • Frequency | Did the camera wearer use the [circular saw] or [crosscut saw] more frequently to [cut wood]? |
| • Prerequisites | What preparation steps did the camera wearer take before [baking cookies]? |
| Predictive | What is the most likely activity the camera wearer will do next after [doing laundry]? |
| Causal | Why did the camera wearer [leave the garage for the second time]? |
| Counterfactual | What if the camera wearer used the [oven] to [cook mashed potatoes]? |

Navigation

| Sub-task | Example question |
|---|---|
| Room-to-Room | How did the camera wearer get from the [building entrance] to the [apartment]? |
| Object Retrieval | How can the camera wearer retrieve the [TV remote] if they are in the [kitchen]? |
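The bracketed spans in the example questions read as video-specific slots. Under that assumption, a question can be treated as a template whose slots are swapped for content from a particular video. The sketch below illustrates the idea only: the helper names `slots` and `fill` are hypothetical, and this is not a description of how the HourVideo questions were actually generated.

```python
import re

# Matches one bracketed slot such as "[microwave]" and captures its contents.
SLOT = re.compile(r"\[([^\[\]]+)\]")


def slots(prototype):
    """Return the slot contents of a question prototype, left to right."""
    return SLOT.findall(prototype)


def fill(prototype, values):
    """Replace each bracketed slot, left to right, with the matching value."""
    found = slots(prototype)
    if len(found) != len(values):
        raise ValueError(f"expected {len(found)} values, got {len(values)}")
    remaining = iter(values)
    return SLOT.sub(lambda match: next(remaining), prototype)


# Proximity prototype from the Visual Reasoning table.
prototype = "Is the [microwave] closer to the [fridge] compared to the [sink]?"
print(slots(prototype))
# Hypothetical replacement values for a different video.
print(fill(prototype, ["toaster", "stove", "window"]))
```

Matching slots by position keeps the sketch short; a fuller version would probably name each slot (object, location, action) so that values cannot be swapped by accident.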

@inproceedings{chandrasegaran2024hourvideo,
  title={HourVideo: 1-Hour Video-Language Understanding},
  author={Chandrasegaran, Keshigeyan and Gupta, Agrim and Hadzic, Lea M. and Kota, Taran and He, Jimming and Eyzaguirre, Cristobal and Durante, Zane and Li, Manling and Wu, Jiajun and Li, Fei-Fei},
  booktitle={Advances in Neural Information Processing Systems},
  year={2024},
  volume={37},
}

This work was supported in part by the Stanford Institute for Human-Centered Artificial Intelligence (HAI), ONR N00014-23-1-2355, and Microsoft, and in part by API credit grants from Google DeepMind and OpenAI. We thank Vishal Dharmadhikari for assistance with setting up Gemini 1.5 evaluations, and Hashem Elezabi and Canon Grace Pham for help with data curation. We thank Chengshu (Eric) Li and Sanjana Srivastava for discussions on navigation questions, and Michael Poli, Daniel Y Fu, Jing Yu Koh, Stephen Tian, Tristan Thrush and Ngoc-Trung Tran for their feedback on the manuscript.